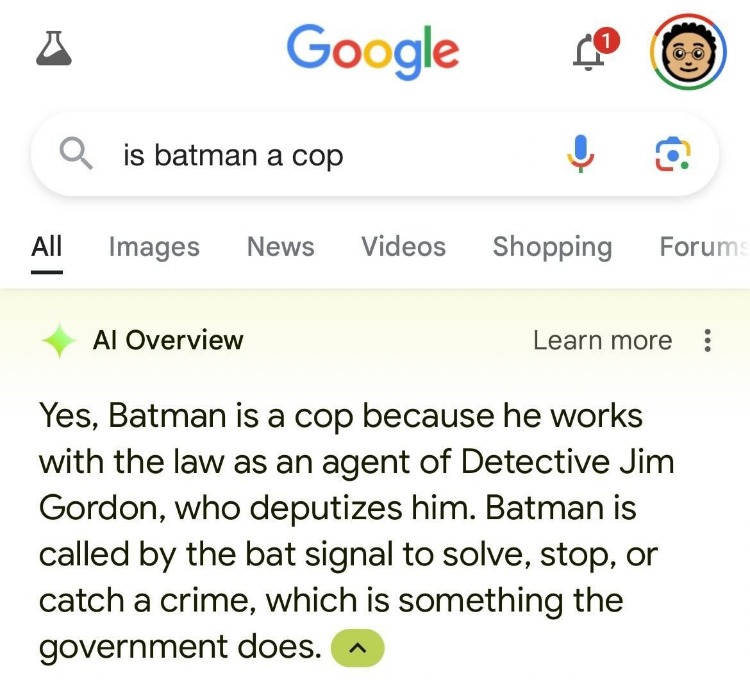

Google's newly launched "AI Overview" feature, designed to provide concise summaries and key information at the top of search results, has come under fire for delivering inaccurate, misleading, and potentially harmful information to users. Here are the critical aspects of the issue:

Inaccurate and Absurd Responses

The AI Overview feature, intended to streamline information access, has been criticized for presenting false and often absurd information. Notable errors include:

- Incorrectly identifying former President Barack Obama as a Muslim.

- Falsely claiming that no African country's name begins with the letter 'K', overlooking Kenya.

- Suggesting the addition of Elmer's glue to pizza sauce.

These inaccuracies appear to stem from the feature drawing on satirical or unreliable sources rather than credible ones, leaving users confused about what to trust.

Google's Response

In response to the backlash, Google has acknowledged the errors and taken steps to remove the problematic AI Overview results. The company insists that it conducts extensive testing and is using these incidents to enhance its AI systems. Despite these efforts, the mishaps have eroded user trust in Google's search engine, a platform many rely on for accurate information.

Google's Other AI Mistakes

This is not Google's first encounter with AI-related issues. The company has previously faced criticism over:

- The Bard chatbot, which gave a factually incorrect answer in its launch demonstration.

- The Gemini image generator, known for producing historically inaccurate depictions.

These recurring problems underscore the broader challenges Google faces in safely integrating experimental AI technology into its core products.

Industry experts emphasize that while it is crucial for Google to remain competitive in the fast-evolving AI landscape, this drive for innovation must be tempered with a commitment to reliability. Maintaining the search engine’s trusted reputation is essential. The recent inaccuracies in the AI Overview feature highlight the inevitable growing pains that come with integrating cutting-edge technology.